February 12, 2021 | FABIAN DIMSKI

One might think that in 2021, it has become common knowledge that high-quality data is a necessary prerequisite for successful (digital) processes:

Without (good) data, nothing works in the 21st century.

Therefore, we could expect that by now, every company actually devotes appropriate attention to its data, which it obviously deserves: thus, Data Governance (DG) and Data Quality Management (DQM) being the focus of the management and having become central components of entrepreneurial action; and, of course, companies being equipped with the necessary personnel.

Reality, however, looks different: in many companies, the topic of data quality still ekes out a niche existence, the importance of which is often underestimated.

But unfortunately, in no company in the world does high-quality data just occur by itself; instead, it is always the result of strategic, tactical and operational measures, namely on an organisational, process-related and technical level. In other words, sustainable DG and targeted DQM are complex challenges.

Along with financial aspects, this is perhaps one of the reasons why many companies in practice (still) hesitate to address these issues in a structured way: where and how should one start? Especially when one's own IT landscape consists of dozens or even hundreds of systems, and the processes and data are proprietary and heterogeneous?

Unfortunately, there are no simple solutions. But there are mature data governance and data quality systems from numerous manufacturers that, with their generic concepts and great flexibility, can capture and map any "data landscape" to create the basis for successful DG and DQ management.

After all, the following applies to data quality in particular: If you can't measure it, you can't manage it.

This article will therefore focus on such a DQ system that can make data quality transparent and thus controllable. For almost three years, Bayard Consulting has been assisting an international retail group in the development and roll-out of Informatica's DQ system Data Quality.

Of course, this blog post is not intended to present the system in detail, but to give an impression of possible applications of the system, its strengths and also its weaknesses.

Therefore, we could expect that by now, every company actually devotes appropriate attention to its data, which it obviously deserves: thus, Data Governance (DG) and Data Quality Management (DQM) being the focus of the management and having become central components of entrepreneurial action; and, of course, companies being equipped with the necessary personnel.

Reality, however, looks different: in many companies, the topic of data quality still ekes out a niche existence, the importance of which is often underestimated.

But unfortunately, in no company in the world does high-quality data just occur by itself; instead, it is always the result of strategic, tactical and operational measures, namely on an organisational, process-related and technical level. In other words, sustainable DG and targeted DQM are complex challenges.

Along with financial aspects, this is perhaps one of the reasons why many companies in practice (still) hesitate to address these issues in a structured way: where and how should one start? Especially when one's own IT landscape consists of dozens or even hundreds of systems, and the processes and data are proprietary and heterogeneous?

Unfortunately, there are no simple solutions. But there are mature data governance and data quality systems from numerous manufacturers that, with their generic concepts and great flexibility, can capture and map any "data landscape" to create the basis for successful DG and DQ management.

After all, the following applies to data quality in particular: If you can't measure it, you can't manage it.

This article will therefore focus on such a DQ system that can make data quality transparent and thus controllable. For almost three years, Bayard Consulting has been assisting an international retail group in the development and roll-out of Informatica's DQ system Data Quality.

Of course, this blog post is not intended to present the system in detail, but to give an impression of possible applications of the system, its strengths and also its weaknesses.

Data Quality from Informatica

"Data Quality" from Informatica (IDQ in short) is an enterprise system for validating data.

The system is available both as an on-premises solution and in the cloud.

That one of the "top dogs" among ETL tools is predestined to also provide a powerful tool, or rather an entire toolbox, in the area of data quality is hardly surprising: because every data quality check consists of these three steps:

- Extract: Data to be checked is loaded from the source system(s).

- Transform: Data is subjected to the respective testing processes.

- Load: test results are stored.

Below, you will learn how to implement these three process steps with IDQ. ( We will not present the web-based IDQ Analyst component, with which so-called data profiling can also be carried out ad hoc by end users.)

1. Extract – flexibler Zugriff auf beliebige Datenquellen mit IDQ

An IDQ system can be integrated quickly and flexibly into any IT landscape. With its numerous so-called connectors, IDQ offers direct access to any data source: all common relational database systems, non-SQL data stores, data lakes and web services can be connected with IDQ. Usually, only configuration settings have to be made in the respective connector.

After establishing connection, so-called Logical Data Objects (LDO) encapsulate data access and hide technical details, so that all data can be processed further in a standardised manner, independent of the source system, after being imported. Of course, LDOs also allow complex data structures to be set up within IDQ for subsequent analysis. Data access can be parameterised so that maximum reusability of components is guaranteed even in complex IT landscapes.

1. Extract – flexible access to any data source with IDQ

An IDQ system can be integrated quickly and flexibly into any IT landscape. With its numerous so-called connectors, IDQ offers direct access to any data source: all common relational database systems, non-SQL data stores, data lakes and web services can be connected with IDQ. Usually, only configuration settings have to be made in the respective connector.

After establishing connection, so-called Logical Data Objects (LDO) encapsulate data access and hide technical details, so that all data can be processed further in a standardised manner, independent of the source system, after being imported. Of course, LDOs also allow complex data structures to be set up within IDQ for subsequent analysis. Data access can be parameterised so that maximum reusability of components is guaranteed even in complex IT landscapes.

2. Transform – a well-stocked library for all types of data processing

As a classic ETL system, IDQ also maps data processing by building transformation chains. The library of individual transformation modules in IDQ forms the "heart" of the application: as with comparable tools, there are of course joiner, sorter, parser or filter transformations available, which can be linked together using drag and drop. Data validations, e.g. to check the completeness, consistency and validity of data, can be configured in a simple way.

Particularly interesting in IDQ is the so-called match transformation, which allows similarities between data records to be identified using various algorithms (such as bigram, jaro or hamming distance). For complex duplicate checks to identify (unwanted) duplicates in datasets, this is a powerful tool in IDQ.

Individual transformation chains are encapsulated in IDQ in so-called mapplets, which in turn can be reused in so-called mappings. If one establishes (ideally at the start of the (I)DQ initiative) appropriate design standards and coding principles and also invests the necessary time to document implemented modules, IDQ allows component-based development, which enables short development cycles and robust runtime behaviour of the IDQ workflows.

As with any other "tool", experience in using IDQ is of course required in order not to accidentally want to "drive a nail into the wall" with a "screwdriver". In fact, however, the training in IDQ is so low in complexity that experienced software developers are able to work with it after a short time, even though deep expertise naturally has to be acquired over time.

2. Transform – eine gut sortierte Bibliothek für alle Arten der Datenverarbeitung

As a classic ETL system, IDQ also maps data processing by building transformation chains. The library of individual transformation modules in IDQ forms the "heart" of the application: as with comparable tools, there are of course joiner, sorter, parser or filter transformations available, which can be linked together using drag and drop. Data validations, e.g. to check the completeness, consistency and validity of data, can be configured in a simple way.

Particularly interesting in IDQ is the so-called match transformation, which allows similarities between data records to be identified using various algorithms (such as bigram, jaro or hamming distance). For complex duplicate checks to identify (unwanted) duplicates in datasets, this is a powerful tool in IDQ.

Individual transformation chains are encapsulated in IDQ in so-called mapplets, which in turn can be reused in so-called mappings. If one establishes (ideally at the start of the (I)DQ initiative) appropriate design standards and coding principles and also invests the necessary time to document implemented modules, IDQ allows component-based development, which enables short development cycles and robust runtime behaviour of the IDQ workflows.

As with any other "tool", experience in using IDQ is of course required in order not to accidentally want to "drive a nail into the wall" with a "screwdriver". In fact, however, the training in IDQ is so low in complexity that experienced software developers are able to work with it after a short time, even though deep expertise naturally has to be acquired over time.

3. Load – Generation of any output with IDQ

On the output side, IDQ is well positioned as the system allows to save any data (structure) as a result of the quality checks. Usually this is done in a " standard" RDBMS. However, this high degree of flexibility of the tool is of course also a challenge: it is imperative to develop a sustainable data model before starting the implementation in order to be able to draw the greatest possible benefit from the result data of the quality measurements. IDQ is indeed primarily a "toolbox" at this point - "blueprints" for a specific "IDQ building" have to be created.

The requirements that such an (I)DQ data model must meet are manifold. Above all, a data model must:

- be able to map any type of source data

- and efficiently provide the result data for operational, tactical and strategic use at different summarisation levels.In the above-mentioned IDQ project, design of such a data model and DQ KPI system was one of the central tasks Bayard Consulting was entrusted with.

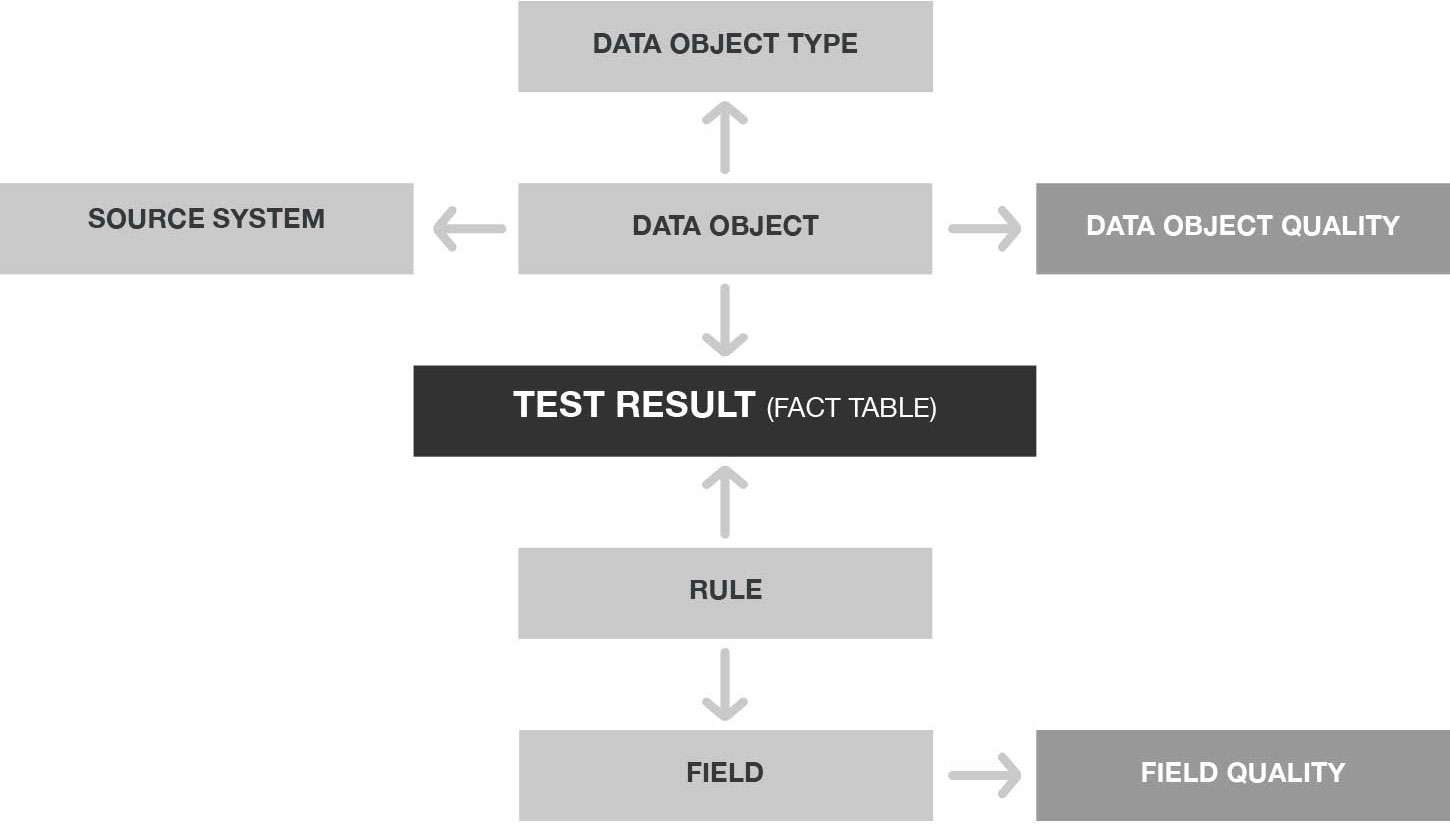

In a highly simplified form, figure 2 shows core tables of the model:

- So-called data objects (=data records) are imported from source systems into the IDQ system as "test objects" and saved with basic information.

- Each data object is assigned to a data object type in order to distinguish, for example, supplier data records from product data records for a trading company. But of course, this generic approach can be used to map any application domain.

- The rules are each assigned to exactly one (data) field that they check.

- Each execution of the IDQ workflows thus produces numerous check results that represent the binary check result (error? YES/NO) of a rule for a specific data object.

- On the one hand, these granular check results are displayed directly as error lists in order to clean up the individual data errors in the source systems during day-to-day operations. In addition, based on check results, DQ key figures are calculated for data objects (data object quality) as well as for checked fields (field quality), and can be used for tactical and strategic evaluation at different summarisation levels. For example, data quality of an entire source system, individual data object types or even individual fields can be evaluated over time in order to derive organisational, technical or procedural measures.

3. Load – Erzeugung beliebiger Ausgaben mit IDQ

On the output side, IDQ is well positioned as the system allows to save any data (structure) as a result of the quality checks. Usually this is done in a " standard" RDBMS. However, this high degree of flexibility of the tool is of course also a challenge: it is imperative to develop a sustainable data model before starting the implementation in order to be able to draw the greatest possible benefit from the result data of the quality measurements. IDQ is indeed primarily a "toolbox" at this point - "blueprints" for a specific "IDQ building" have to be created.

The requirements that such an (I)DQ data model must meet are manifold. Above all, a data model must:

- be able to map any type of source data

- and efficiently provide the result data for operational, tactical and strategic use at different summarisation levels.In the above-mentioned IDQ project, design of such a data model and DQ KPI system was one of the central tasks Bayard Consulting was entrusted with.

In a highly simplified form, figure 2 shows core tables of the model:

- So-called data objects (=data records) are imported from source systems into the IDQ system as "test objects" and saved with basic information.

- Each data object is assigned to a data object type in order to distinguish, for example, supplier data records from product data records for a trading company. But of course, this generic approach can be used to map any application domain.

- The rules are each assigned to exactly one (data) field that they check.

- Each execution of the IDQ workflows thus produces numerous check results that represent the binary check result (error? YES/NO) of a rule for a specific data object.

- On the one hand, these granular check results are displayed directly as error lists in order to clean up the individual data errors in the source systems during day-to-day operations. In addition, based on check results, DQ key figures are calculated for data objects (data object quality) as well as for checked fields (field quality), and can be used for tactical and strategic evaluation at different summarisation levels. For example, data quality of an entire source system, individual data object types or even individual fields can be evaluated over time in order to derive organisational, technical or procedural measures.

4. Front-end – visualisation of DQ results with BI systems

IDQ ist (abgesehen von der oben kurz erwähnten Analyst-Applikation) primär ein Backend-System. Das bedeutet, dass für die Visualisierung der Ergebnisse der Datenqualitätsprüfungen Dritt-Systeme integriert werden sollten, wenn sich die Endanwender nicht äußerst bescheiden mit Excel-Dateien zufriedengeben.

Prädestiniert dafür sind selbstverständlich sog. Business-Intelligence-Lösungen (BI), die es in großer Zahl am Markt gibt und die in vielen Unternehmen, unabhängig vom Thema „Datenqualität“, bereits im Einsatz sind. Neben bspw. Tableau, Microsoft PowerBI oder Qlik gehört auch Microstrategy zu diesen BI-Tools, mit denen in kurzer Zeit dynamische und flexible Dashboards und Reports erstellt werden können, um die DQ-Ergebnisse bedarfsgerecht und zielgruppen-spezifisch bereitzustellen.

4. Front-end – visualisation of DQ results with BI systems

Conclusion

Informatica's enterprise ETL system Data Quality is a powerful toolbox to gain transparency about the actual data quality in the entire IT landscape of a company and to identify existing problems.

The system's strengths lie in its excellent integration properties, which allow access to all types of data, and in its transformation library, which enables any type of data check.

The decisive factor here, however, is that the toolbox is used in a very structured, standardised and documented way in order to achieve a high degree of reusability of the components and short development cycles.

The functional scope of IDQ explicitly does not include a user-friendly front end. Therefore, connecting a BI system to visualise the DQ results is recommended.

With the right concepts in terms of result data modelling and DQ KPI system, a comprehensive database can be built up with Informatica's Data Quality: thus IDQ creates a necessary prerequisite for successful Data Quality Management.